Spark SQL Connector

Overview#

Using Osm4scala Spark SQL Connector, reading OSM Pbf file from PySpark, Spark Scala, SparkSQL or SparkR is

so easy as writing .read.format("osm.pbf").

The current implementation offers:

- Spark 2 and 3 versions, with Scala 2.11 and 2.12

- Full Spark SQL integration.

- Easy schema.

- Internal optimizations, like:

- Transparent parallelism reading multiply pbf files.

- File splitting to increase parallelism per pbf file.

- Pushdown required columns.

The library is distributed via Maven Repo in two different flavours:

- All in one jar to be able to use directly with all dependencies

- As plain scala dependency.

Options#

This is the list of options available when creating a dataframe:

| Option | default | possible values | description |

|---|---|---|---|

| split | true | true/false | If false, Spark will not split pbf files, so parallelization will be per file. |

Ex. from the Spark Shell:

Schema definition#

The Dataframe Schema used is the following one:

Where the column type could be:

| value | meaning |

|---|---|

| 0 | Node |

| 1 | Way |

| 2 | Relation |

Where the column relationType could be:

| value | meaning |

|---|---|

| 0 | Node |

| 1 | Way |

| 2 | Relation |

| 3 | Unrecognized |

All in one jar#

Usually, osm4scala is used from the Spark Shell or from a Notebook. For these cases, to simplify the way to add the connector as dependency, you have a shaded fat jar version with all dependencies that are necessary. The fat jar is near 5MB, so the size should be not a problem.

As you probably know, Spark is base in Scala. Different Spark distributions are using different Scala versions. This is the Spark/Scala version combination available for latest release v1.0.11:

| Spark Branch | Scala | Packages |

|---|---|---|

| 2.4 | 2.11 | com.acervera.osm4scala:osm4scala-spark2-shaded_2.11:1.0.11 |

| 2.4 | 2.12 | com.acervera.osm4scala:osm4scala-spark2-shaded_2.12:1.0.11 |

| 3.0 / 3.1 | 2.12 | com.acervera.osm4scala:osm4scala-spark3-shaded_2.12:1.0.11 |

Although following sections are focus on Spark Shell and Notebooks, you can use the same technique in other situations where you want to use the shaded version.

Why a fat shaded library?#

Osm4scala has a transitive dependency with Java Google Protobuf library. Spark, Hadoop and other libraries in the ecosystem are using an old version of the same library (currently v2.5.0 from Mar, 2013) that is not compatible.

To solve the conflict, I published the library in two fashion:

- Fat and Shaded as

osm4scala-spark[2,3]-shadedthat solvescom.google.protobuf.**conflicts. - Don't shaded as

osm4scala-spark[2,3], so you can solve the conflict on your way.

Spark Shell#

Start the spark shell as usual, using the

--packagesoption to add the right dependency. The dependency will depend to the Spark Version that you are using. Please, check the reference table in the previous section.ScalaPySparkSQLCreate the Dataframe using the osm.pbf format, pointing to the pbf file or folder containing pbf files.

ScalaPySparkSQLUse the created dataframe as usual, keeping in mind the schema explained previously.

In the next example, we are going to count the number of different primitives in the file. As explained in the schema, 0 are nodes, 1 ways and 2 relations.

ScalaPySparkSQLIn this other examples, we are going to extract all traffic lights as POIs.

ScalaPySparkSQL

Notebook#

There are different notebooks solutions in the market and each one is using a different way to import libraries. But after importing the library, you can use the osm4scala connector in the same way.

For this section, we are going to use Jupyter Notebook and JupyterLab.

note

If you can not access to a Jupyter Notebook installation, you can use jupyter/all-spark-notebook Docker image as I will do. Here, full documentation about how to install and use it.

To start the docker image, as easy as running the docker image and use the link provided:

If you prefer an online option, you can try MyBinder.

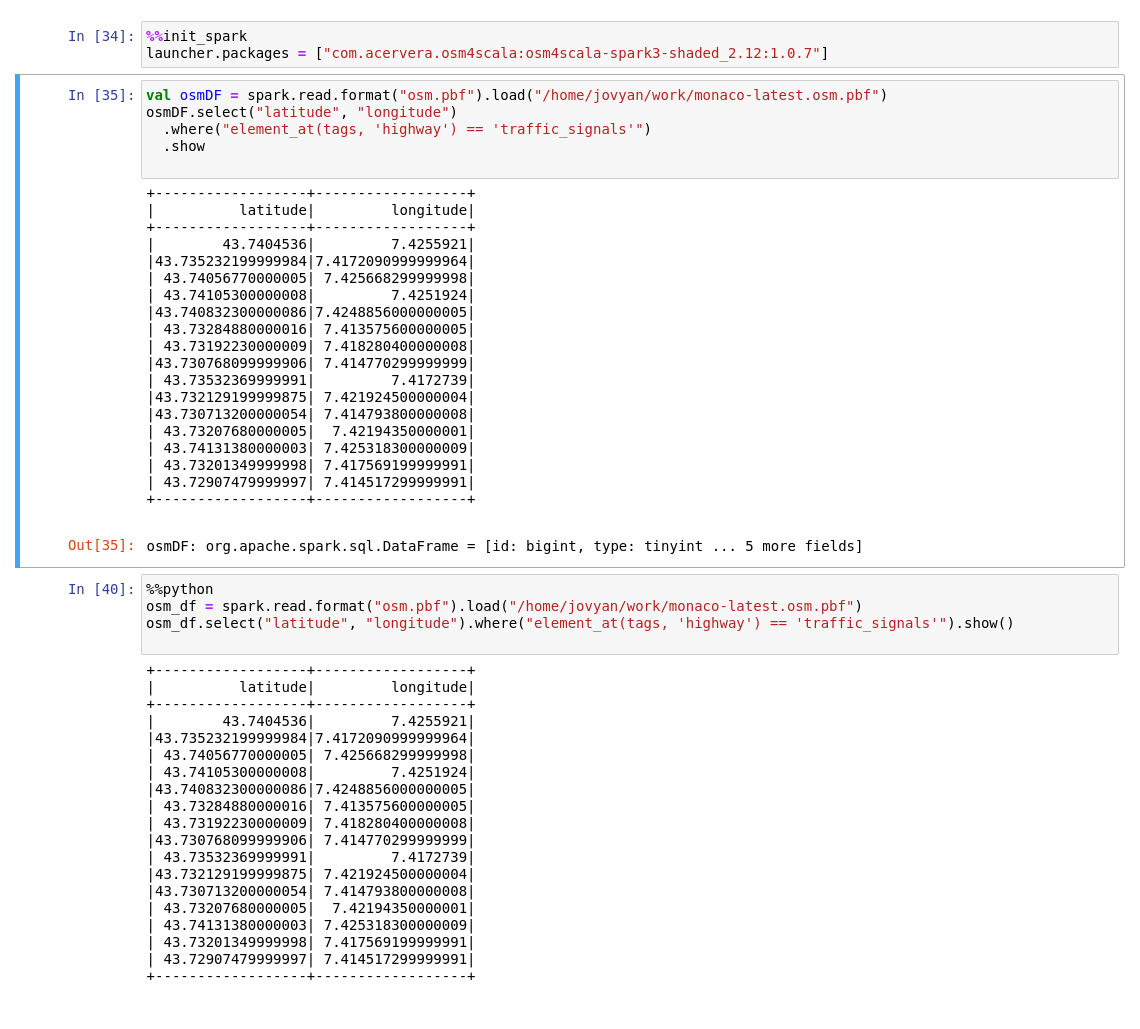

Create a new Notebook. For Scala, we are going to use the

spylon-kernel.Add a new Cell and import the right osm4scala library for your Notebook installation, following the table at the start of the All in one jar section. In or case, the version used is

Spark v3.1.1withScala 2.12.If you did not execute anything before, running the cell will start the Spark session. Sometime, depending to the Notebook used, you will need to restart the Spark session (or Kernel session).

From the previous step, you can start creating dataframes from pbf files as we did before in previous sections.

Let's suppose that you uploaded a file called

monaco-anonymized.osm.pbfinto the notebook'sworkfolder.If you create a new Cell with next content, you will get all traffic signals in Monaco.

Scalanote

Pay attention to the first line in the Python Cell. Because the kernel used is using

Scalaas default, you need to add the%%pythonheader.Next, a screenshot with the output generated:

Of course, from the dataframe you can create beautiful maps, graphs, etc. But that is out of the scope of this documentation.

Spark application#

When we need to write more complex analysis, data extractions, ETLs, etc, it is necessary to write Spark applications.

Import the spark connector it is not really necessary because the integration is transparent.

Only two possible advantages (not available if using Python) are:

The use of static constants, for example, to avoid

magic numbersfor primitive and relation types.Using the library as part of Unit Testing or Integration Testing.

Adding osm4scala jar library as part of the deployable artifact.

For Python, like in Scala, it is not necessary to import the library except in runtime. But unlike in Scala, you can not easily to import and use facilities from the Scala library. So in this case, you can jump to the next step.

SbtMavenReduce artifact size.

The shaded dependency is near 5MB. You can add this dependency as a package when you submit the job instead to include it in the deployable artifact generated. To do it, set the scope dependency as

TestorProvided.If you don't know what I'm talking about, don't pay too much attention and forget it. 😉

Create the Dataframe using the osm.pbf format, pointing to the pbf file or folder containing pbf files, and use as usual.

Scala / PrimitivesCounter.scalaPySpark / PrimiriveCounter.pySubmit the application to your Spark cluster.

ScalaPySparkOptional --packages.

You will not need to add

--packages 'com.acervera.osm4scala:osm4scala-spark3-shaded_2.12:1.0.11'if it is part of the deployed artifact.

More Examples#

Following, more examples. This time, we will create a SQL temporal view and SQL:

Plain (non-shaded jar) dependency.#

Sometimes we need to write more complex applications, analysis, data extractions, ETLs, integrate with other libraries,

unit testing, etc.

In that case, the best practice is to manage dependencies using sbt or maven, instead to import the shaded file.

OSM Pbf files are based on Protocol Buffer, so Scalapb is used as deserializer so it's the unique transitive dependency.

This is the Spark/Scala version combination available for latest release v1.0.11:

| Spark branch | Scalapb | Scala | Packages |

|---|---|---|---|

| 2.4 | 0.9.7 | 2.11 | com.acervera.osm4scala:osm4scala-spark2_2.11:1.0.11 |

| 2.4 | 0.10.2 | 2.12 | com.acervera.osm4scala:osm4scala-spark2_2.12:1.0.11 |

| 3.0 / 3.1 | 0.10.2 | 2.12 | com.acervera.osm4scala:osm4scala-spark3_2.12:1.0.11 |

After importing the connector, you can use it as we explained in the All in one section. So lets see how to import the library in our project and few examples.

Resolving dependency conflicts#

Osm4scala has a transitive dependency with Java Google Protobuf library. Spark, Hadoop and other libraries in the ecosystem are using an older version of the same library (currently v2.5.0 from Mar, 2013) that is not compatible.

To be able to resolve this conflicts, you will need to shade your deployed jar. The conflict comes from the package com.google.protobuf.

Following, how to do it using SBT:

It is possible to do the same using the shade maven plugin.